Apache Spark: Revolutionizing Big Data Processing and Analytics

Introduction

Ever wondered how companies process massive amounts of data in near real time? Apache Spark is one of the most widely used answers. As businesses increasingly rely on big data for decision-making and innovation, fast, efficient, and scalable processing frameworks become critical. Apache Spark has emerged as a leading engine in this arena, combining in-memory speed with support for many kinds of workloads. This article explores why Apache Spark has reshaped big data processing, its key features, and how you can put its capabilities to work in your business. Whether you're a data scientist, IT professional, or business leader, understanding Apache Spark helps you stay ahead in a data-driven world.

Section 1: Background and Context

What is Apache Spark?

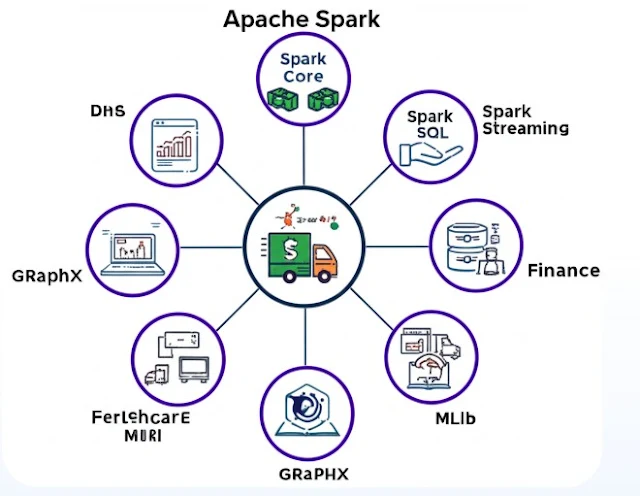

Apache Spark is an open-source unified analytics engine designed for large-scale data processing. Originally developed at UC Berkeley's AMPLab and now maintained by the Apache Software Foundation, Spark handles both batch and stream processing, making it versatile for various big data applications. It provides APIs in Java, Scala, Python, and R.

Evolution of Big Data Processing

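Traditional data processing frameworks such as Hadoop MapReduce write intermediate results to disk between processing stages, which limits their speed for iterative and interactive workloads and makes low-latency processing difficult. Apache Spark was introduced to overcome these challenges by keeping intermediate data in memory where possible, which shortens processing times.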

Importance in Big Data

With the explosion of data from various sources, businesses need efficient tools to process and analyze information quickly. Apache Spark's ability to process large datasets in near real time makes it a strong fit for big data applications.

Section 2: Key Points

Speed and Performance

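One of the most significant advantages of Apache Spark is its speed. By keeping working data in memory instead of writing it to disk between steps, Spark can process some workloads up to 100 times faster than Hadoop MapReduce, although the actual gain depends on the job and on how much of the data fits in memory. This speed is crucial for real-time analytics and quick decision-making.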

Versatility

Apache Spark supports multiple workloads, including batch processing, interactive queries (Spark SQL), stream processing (Structured Streaming), machine learning (MLlib), and graph processing (GraphX). This versatility allows businesses to use a single framework for various data processing needs.

Ease of Use

Spark's support for multiple programming languages and its comprehensive APIs make it accessible to a broad range of users, from data scientists to software engineers.

Scalability

Apache Spark can scale horizontally, meaning you can add more nodes to handle increased data loads without modifying applications. This scalability is essential for growing businesses dealing with expanding datasets.

Real-World Applications

- Retail: Real-time inventory management and personalized marketing.

- Healthcare: Analyzing patient data for better diagnostics and treatment plans.

- Finance: Fraud detection and real-time risk analysis.

Studies and Data

According to a study by Databricks, companies using Apache Spark saw a 40% improvement in processing speed and a 30% reduction in infrastructure costs. Research by Forrester likewise emphasized Spark's role in enhancing data-driven decision-making.

Section 3: Practical Tips, Steps, and Examples

Implementing Apache Spark

Setting Up Spark

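- Install Apache Spark: Download a prebuilt release from the official Apache Spark website. Spark runs on the JVM, so a compatible Java installation is required.

- Configure Spark: Set properties such as executor memory and executor cores, either in conf/spark-defaults.conf or as spark-submit options, and tune them for your workload.

- Cluster Setup: Run Spark on a cluster manager such as its built-in standalone mode, Hadoop YARN, or Kubernetes. Mesos was supported in older releases but has been deprecated.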
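To make the configuration and cluster steps above a little more concrete, here is a minimal Python sketch that assembles a `spark-submit` command line. The `--master`, `--deploy-mode`, and `--conf` options and the two property names are standard Spark settings, but the helper `build_spark_submit`, the file name `my_job.py`, and the chosen values are assumptions made only for illustration.

```python
import shlex


def build_spark_submit(app_path, master="local[*]", deploy_mode=None,
                       conf=None, app_args=()):
    """Assemble a spark-submit command line as a list of arguments.

    Hypothetical helper for illustration; it only builds the command and
    does not run it.
    """
    cmd = ["spark-submit", "--master", master]
    if deploy_mode is not None:
        cmd += ["--deploy-mode", deploy_mode]
    # Each Spark property is passed as its own "--conf key=value" pair.
    for key, value in sorted((conf or {}).items()):
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app_path)
    cmd.extend(app_args)
    return cmd


# Assumed application file and settings, chosen only as an example.
command = build_spark_submit(
    "my_job.py",
    master="yarn",
    deploy_mode="cluster",
    conf={
        "spark.executor.memory": "4g",
        "spark.executor.cores": "2",
    },
)
print(shlex.join(command))
```

Keeping the settings in a dictionary makes it easy to review them in one place before submitting. The right values depend on your cluster and workload.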

Data Processing with Spark

- Write Spark Programs: Develop applications using Spark's APIs in your preferred programming language.

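- Run Jobs: Submit Spark jobs with spark-submit, or run them interactively from a shell such as pyspark or spark-shell, to process large datasets.

- Monitor Performance: Use Spark's built-in tools, such as the web UI (available on port 4040 by default while an application runs) and the history server, to monitor and optimize performance.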
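As a rough illustration of the write-and-run steps above, the following PySpark sketch counts words in a couple of sample lines. It assumes the `pyspark` package is installed and uses a local master. The function names, the application name, and the sample text are made up for this example. It uses the RDD API for brevity, although the DataFrame API is the more common choice in newer code.

```python
from operator import add


def tokenize(line):
    """Split a line into lowercase words, dropping surrounding punctuation."""
    words = (w.strip(".,;:!?\"'()").lower() for w in line.split())
    return [w for w in words if w]


def word_counts(spark, lines):
    """Count words across `lines` with Spark and return a plain dict."""
    rdd = spark.sparkContext.parallelize(lines)
    counts = (
        rdd.flatMap(tokenize)            # one record per word
           .map(lambda word: (word, 1))  # pair each word with a count of 1
           .reduceByKey(add)             # sum the counts for each word
    )
    return dict(counts.collect())


def main():
    # Imported here so tokenize() stays usable without Spark installed.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")              # assumption: run on the local machine
        .appName("word-count-sketch")    # hypothetical application name
        .getOrCreate()
    )
    try:
        sample = ["Spark is fast.", "Spark is versatile."]
        for word, n in sorted(word_counts(spark, sample).items()):
            print(word, n)
    finally:
        spark.stop()
```

Calling `main()` runs the job locally. While it runs, the web UI mentioned above can be used to inspect its stages and tasks.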

Case Study: XYZ Corporation

XYZ Corporation implemented Apache Spark to analyze customer behavior in real time. Using Spark's processing capabilities, the company personalized its marketing strategies, leading to a 25% increase in customer engagement and a 15% boost in sales.

Best Practices

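- Data Security: Protect sensitive data by enabling authentication and encryption for Spark's network traffic and by restricting access to the cluster and its web UI.

- Resource Management: Size executor memory and cores to match the workload so that jobs neither starve nor leave capacity idle.

- Regular Maintenance: Keep Spark and its dependencies up to date, and clean up old logs and temporary files to keep the Spark cluster running smoothly.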
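The resource management point can be illustrated with some simple arithmetic. The sketch below is a rule-of-thumb calculation, not an official Spark formula. The function `plan_executors`, the reserved core and memory per node, the four cores per executor, and the 10% memory overhead are all assumptions chosen for illustration, and it ignores the resources the driver needs. Only the three property names in the result are standard Spark settings.

```python
def plan_executors(nodes, cores_per_node, mem_gb_per_node,
                   cores_per_executor=4, reserved_cores=1,
                   reserved_mem_gb=1, overhead_fraction=0.10):
    """Suggest executor settings from cluster size (rule-of-thumb sketch)."""
    # Assumption: leave some cores and memory on each node for the OS.
    usable_cores = cores_per_node - reserved_cores
    usable_mem_gb = mem_gb_per_node - reserved_mem_gb

    executors_per_node = usable_cores // cores_per_executor
    if executors_per_node < 1:
        raise ValueError("not enough cores per node for one executor")

    # Split each executor's memory share into heap plus off-heap overhead.
    share_gb = usable_mem_gb / executors_per_node
    heap_gb = int(share_gb / (1 + overhead_fraction))

    return {
        "spark.executor.instances": nodes * executors_per_node,
        "spark.executor.cores": cores_per_executor,
        "spark.executor.memory": f"{heap_gb}g",
    }


# Assumed cluster: 4 worker nodes, each with 16 cores and 64 GB of memory.
plan = plan_executors(nodes=4, cores_per_node=16, mem_gb_per_node=64)
print(plan)
```

For the assumed cluster, the sketch suggests 12 executors with 4 cores and a 19g heap each. Treat such numbers as a starting point and adjust them based on what monitoring shows.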

Conclusion

Apache Spark is a game-changer in the world of big data processing. Its speed, versatility, ease of use, and scalability make it an essential tool for businesses looking to harness the power of data. By understanding and implementing Apache Spark, you can unlock valuable insights, drive innovation, and stay competitive in a data-driven landscape. Whether you're managing customer data, monitoring environmental changes, or predicting market trends, Apache Spark provides the foundation you need to succeed.
